Texture synthesis

Given an input texture image, texture synthesis aims to produce an output texture image that is visually similar to, yet pixel-wise different from, the input texture. Ideally, the output image should be perceived as another part of the same large piece of homogeneous material from which the input texture was taken. This practical session explains how to implement texture synthesis through optimization, based on the algorithm described in L. Gatys, A. S. Ecker, and M. Bethge, Texture synthesis using convolutional neural networks, In Advances in Neural Information Processing Systems, pages 262–270, 2015.

Image: texture synthesis (Grapes)
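In the Gatys et al. approach, a texture is summarized by the Gram matrices of CNN feature maps, and the synthesized image is optimized so that its Gram matrices match those of the input. The sketch below only computes that loss, E_l = ||G_l - A_l||^2 / (4 N_l^2 M_l^2) summed with layer weights w_l. It assumes the feature maps (arrays of shape channels × height × width) have already been extracted from a pretrained network; the function names are illustrative, not part of the session's code.

```python
import numpy as np

def gram_matrix(features):
    """Gram matrix of a feature map of shape (channels, height, width)."""
    n_channels = features.shape[0]
    flat = features.reshape(n_channels, -1)  # N_l x M_l matrix
    return flat @ flat.T  # N_l x N_l channel correlations

def texture_loss(synth_feats, target_feats, weights):
    """Weighted sum over layers of ||G_l - A_l||^2 / (4 N_l^2 M_l^2)."""
    loss = 0.0
    for f_x, f_t, w in zip(synth_feats, target_feats, weights):
        n_l = f_x.shape[0]                   # number of channels
        m_l = f_x.shape[1] * f_x.shape[2]    # number of spatial positions
        diff = gram_matrix(f_x) - gram_matrix(f_t)
        loss += w * np.sum(diff ** 2) / (4.0 * n_l ** 2 * m_l ** 2)
    return loss
```

Because the Gram matrix sums over spatial positions, it ignores where features occur: a circularly shifted feature map yields the same Gram matrix, which is what lets the optimized image differ pixel-wise from the input.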

Texture synthesis with Maximum Entropy

This practical session implements the texture synthesis algorithm developed in Maximum entropy methods for texture synthesis: theory and practice, V. De Bortoli, A. Desolneux, A. Durmus, B. Galerne, A. Leclaire, SIAM Journal on Mathematics of Data Science (SIMODS), 2021.

Image: texture synthesis (Coffee beans)
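The maximum entropy (macrocanonical) idea can be illustrated on a toy problem. The sketch below is a minimal illustration under strong assumptions: the features are simply F(x) = (mean of x, mean of x²) over pixels, rather than the CNN or wavelet features used for real textures, and the names `maxent_toy`, `gamma` and `delta` are hypothetical. It alternates an unadjusted Langevin step on the image x, for a density proportional to exp(-n⟨θ, F(x)⟩), with a stochastic gradient step on the dual variable θ that pushes the sample statistics toward those of the exemplar. It is in the spirit of the Langevin-plus-stochastic-approximation schemes used for such models, not a reproduction of the paper's algorithm.

```python
import numpy as np

def maxent_toy(target, n_pixels=4096, n_iter=3000, gamma=0.05, delta=0.005, seed=0):
    """Toy macrocanonical sampler matching the mean and second moment of `target`."""
    rng = np.random.default_rng(seed)
    f0 = np.array([target.mean(), (target ** 2).mean()])  # exemplar statistics F(x0)
    theta = np.array([0.0, 1.0])  # theta[1] > 0 keeps exp(-U) integrable
    x = rng.standard_normal(n_pixels)
    for _ in range(n_iter):
        # Langevin step on U(x) = sum_i theta[0] * x_i + theta[1] * x_i**2
        grad = theta[0] + 2.0 * theta[1] * x
        x = x - gamma * grad + np.sqrt(2.0 * gamma) * rng.standard_normal(n_pixels)
        # Dual update: raise theta where the sample statistics exceed the target
        fx = np.array([x.mean(), (x ** 2).mean()])
        theta = theta + delta * (fx - f0)
    return x, theta
```

Since θ is driven by the statistics of the chain itself, the constraint E[F(x)] = F(x0) is enforced on the actual samples, which also absorbs the discretization bias of the Langevin step.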

Texture interpolation

Texture interpolation, or mixing, consists of generating new textures by mixing different texture examples. This practical session explains how to implement texture interpolation between arbitrary textures, based on the algorithm described in J. Vacher, A. Davila, A. Kohn, and R. Coen-Cagli, Texture interpolation for probing visual perception, Advances in Neural Information Processing Systems, 2020.

Image: texture interpolation (leaf of Tannat and water of Tulum)
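One standard way to interpolate between two texture models is to approximate each by a Gaussian distribution (for instance over feature vectors) and move along the optimal transport (Wasserstein-2) geodesic between them. The sketch below computes a point on that geodesic; it is offered as an illustration of the interpolation principle under that Gaussian assumption, not as the paper's exact procedure, and the function names are hypothetical.

```python
import numpy as np

def _sqrtm_psd(mat):
    """Square root of a symmetric positive semi-definite matrix."""
    vals, vecs = np.linalg.eigh((mat + mat.T) / 2.0)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T

def gaussian_geodesic(m0, cov0, m1, cov1, t):
    """Mean and covariance at time t on the W2 geodesic from N(m0, cov0) to N(m1, cov1)."""
    s0 = _sqrtm_psd(cov0)
    s0_inv = np.linalg.inv(s0)  # assumes cov0 is positive definite
    # Optimal transport map: x -> m1 + a (x - m0), with a cov0 a = cov1
    a = s0_inv @ _sqrtm_psd(s0 @ cov1 @ s0) @ s0_inv
    interp = (1.0 - t) * np.eye(len(m0)) + t * a
    mean_t = (1.0 - t) * m0 + t * m1
    cov_t = interp @ cov0 @ interp.T
    return mean_t, cov_t
```

At t = 0 and t = 1 this returns the two input Gaussians; intermediate values of t give the mixed models.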

Style transfer

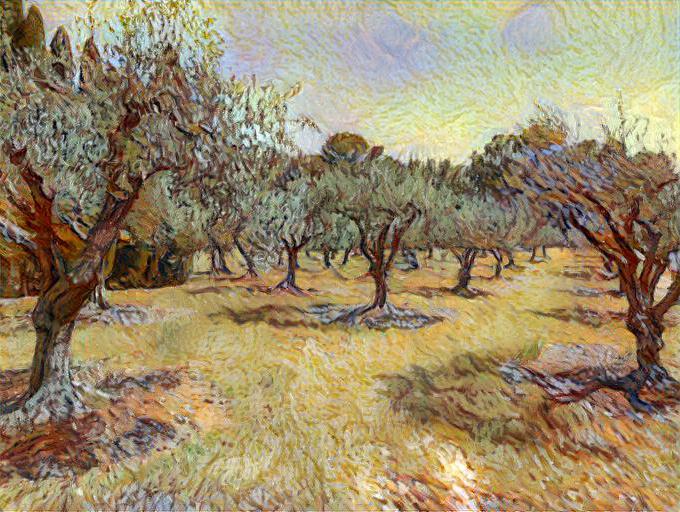

Style transfer takes an image and reproduces it in a new artistic style. The algorithm takes three images (an input image, a content image, and a style image) and modifies the input so that it resembles the content of the content image and the artistic style of the style image. This practical session explains how to implement neural style transfer, based on the algorithm developed in L. Gatys, A. Ecker and M. Bethge, Image style transfer using convolutional neural networks, Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), pp. 2414-2423, Jun. 2016.

Image: style transfer (Content: Olives. Style: Van Gogh)
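The optimized objective combines a content term and a style term, L_total = α L_content + β L_style, where L_content = ½ Σ (F - P)² compares feature maps of the input and content images, and L_style compares Gram matrices with the input's style image as in texture synthesis. The sketch below computes this loss, assuming the feature maps (channels × height × width) are already extracted from a pretrained CNN; the default α and β are illustrative, not prescribed values.

```python
import numpy as np

def gram_matrix(features):
    """Channel-by-channel correlations of a (channels, height, width) feature map."""
    flat = features.reshape(features.shape[0], -1)
    return flat @ flat.T

def content_loss(x_feat, content_feat):
    """Squared-error loss between feature maps at the content layer."""
    return 0.5 * np.sum((x_feat - content_feat) ** 2)

def style_loss(x_feats, style_feats, weights):
    """Weighted Gram-matrix loss over the style layers."""
    total = 0.0
    for f_x, f_s, w in zip(x_feats, style_feats, weights):
        n_l, m_l = f_x.shape[0], f_x.shape[1] * f_x.shape[2]
        diff = gram_matrix(f_x) - gram_matrix(f_s)
        total += w * np.sum(diff ** 2) / (4.0 * n_l ** 2 * m_l ** 2)
    return total

def total_loss(x_content_feat, content_feat, x_style_feats, style_feats,
               weights, alpha=1.0, beta=1e3):
    """alpha / beta trades off content fidelity against style strength."""
    return (alpha * content_loss(x_content_feat, content_feat)
            + beta * style_loss(x_style_feats, style_feats, weights))
```

In practice the input image is updated by gradient descent (or L-BFGS) on this loss, with gradients obtained by backpropagation through the network.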

Gaussian texture synthesis and mixing

This practical session shows how to perform texton and Gaussian synthesis of color and grayscale textures, as well as texture mixing.

Image: texture mixing (wall and water of Tulum)
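A common Gaussian texture model is asymptotic discrete spot noise (ADSN): the mean-subtracted exemplar, normalized by 1/√(MN), is circularly convolved with white Gaussian noise and the mean is added back. The sketch below implements that model with the FFT; it is an illustration of Gaussian synthesis, assuming periodic boundary conditions, and the function name `adsn` is hypothetical. For color images, the same noise field is used for all channels, which preserves the color correlations of the exemplar.

```python
import numpy as np

def adsn(u, rng):
    """Gaussian (ADSN) texture sample for a gray (H, W) or color (H, W, C) image."""
    u = np.asarray(u, dtype=float)
    h, w = u.shape[:2]
    mean = u.mean(axis=(0, 1), keepdims=True)  # per-channel mean
    noise_hat = np.fft.fft2(rng.standard_normal((h, w)))
    if u.ndim == 3:
        noise_hat = noise_hat[:, :, None]  # same noise field for every channel
    spot_hat = np.fft.fft2(u - mean, axes=(0, 1))
    # Circular convolution of the normalized spot with white noise
    conv = np.fft.ifft2(spot_hat * noise_hat, axes=(0, 1)).real / np.sqrt(h * w)
    return mean + conv
```

Because the centered exemplar sums to zero, the circular convolution also sums to zero, so each output channel has exactly the exemplar's mean.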

DCGANs

This practical session explains how to use and train DCGANs as generative models. Specifically, we use a DCGAN to generate images of handwritten digits resembling MNIST.

Image: Digits generated with the DCGAN network
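A DCGAN generator upsamples a noise vector with strided transposed convolutions, while the discriminator downsamples with strided convolutions instead of pooling. The sketch below checks the spatial sizes for a hypothetical MNIST layout (noise projected to 7×7, then two upsampling layers with kernel 4, stride 2, padding 1); the layer configuration is an assumption for illustration, not the session's architecture. The size formulas are the standard ones for convolution and transposed convolution with dilation 1.

```python
def conv_out(size, kernel, stride, padding):
    """Output size of a strided convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1

def conv_transpose_out(size, kernel, stride, padding, output_padding=0):
    """Output size of a transposed convolution (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding

# Hypothetical (kernel, stride, padding) for each layer
GEN_LAYERS = [(4, 2, 1), (4, 2, 1)]   # generator: 7 -> 14 -> 28
DISC_LAYERS = [(4, 2, 1), (4, 2, 1)]  # discriminator: 28 -> 14 -> 7

def trace_sizes(start, layers, step):
    """List of spatial sizes after each layer."""
    sizes = [start]
    for kernel, stride, padding in layers:
        sizes.append(step(sizes[-1], kernel, stride, padding))
    return sizes

print(trace_sizes(7, GEN_LAYERS, conv_transpose_out))  # [7, 14, 28]
print(trace_sizes(28, DISC_LAYERS, conv_out))          # [28, 14, 7]
```

The usual DCGAN guidelines pair these layers with batch normalization, ReLU activations and a tanh output in the generator, and LeakyReLU activations in the discriminator.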

Texture synthesis with GMMOT

This practical session implements the texture synthesis algorithm developed in Arthur Leclaire, Julie Delon, Agnès Desolneux. Optimal Transport Between GMM for multiscale Texture Synthesis. 2022. hal-03613622

Image: texture synthesis
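Optimal transport between Gaussian mixture models can be defined by restricting transport plans to couplings of the mixture components, with the squared Wasserstein-2 distance between Gaussians as the ground cost: W2² = |m0 - m1|² + tr(Σ0 + Σ1 - 2(Σ0^{1/2} Σ1 Σ0^{1/2})^{1/2}). The sketch below computes this for the special case of two mixtures with the same number K of equally weighted components, where an optimal plan is a permutation, found here by brute force (only practical for small K). The restriction to equal weights and the function names are assumptions for illustration; general weights require solving a small linear program.

```python
import itertools
import numpy as np

def _sqrtm_psd(mat):
    """Square root of a symmetric positive semi-definite matrix."""
    vals, vecs = np.linalg.eigh((mat + mat.T) / 2.0)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T

def gaussian_w2_sq(m0, c0, m1, c1):
    """Squared Wasserstein-2 distance between N(m0, c0) and N(m1, c1)."""
    s0 = _sqrtm_psd(c0)
    cross = _sqrtm_psd(s0 @ c1 @ s0)
    return float(np.sum((m0 - m1) ** 2) + np.trace(c0 + c1 - 2.0 * cross))

def mw2_sq_equal_weights(means0, covs0, means1, covs1):
    """GMM transport cost for K equally weighted components on each side."""
    k = len(means0)
    cost = np.array([[gaussian_w2_sq(means0[i], covs0[i], means1[j], covs1[j])
                      for j in range(k)] for i in range(k)])
    # With uniform weights, an optimal plan is a permutation of components
    best = min(itertools.permutations(range(k)),
               key=lambda perm: sum(cost[i, perm[i]] for i in range(k)))
    value = sum(cost[i, best[i]] for i in range(k)) / k
    return value, best
```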

Image synthesis with line segments constraints

This practical session implements the image synthesis algorithm developed in A. Desolneux, When the a contrario approach becomes generative, IJCV, 2016

Image: image reconstruction with line segments
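The a contrario framework that this generative approach builds on scores a candidate structure, such as a line segment, by its number of false alarms: NFA = N_tests · P(B(n, p) ≥ k), where n points lie on the segment, k of them are aligned with it, and p is the probability that a point is aligned under the noise model. The sketch below shows this detection-side computation only, not the generative algorithm of the paper; the function names are hypothetical, and the values in the usage line (an image size, a segment, p = 1/16) are illustrative.

```python
from math import comb

def binomial_tail(n, k, p):
    """P(B(n, p) >= k): probability of at least k aligned points out of n."""
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k, n + 1))

def nfa(n_tests, n, k, p):
    """Number of false alarms; a segment is epsilon-meaningful if NFA <= epsilon."""
    return n_tests * binomial_tail(n, k, p)

# Illustrative values: about N**4 candidate segments in an N x N image
print(nfa(512 ** 4, 50, 20, 1 / 16))
```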